AI revolutionizes automotive safety with 42% of professionals using it for autonomous vehicle design, creating new challenges for meeting ISO 26262 standards.

Drivetech Partners

The automotive industry is undergoing a revolutionary transformation as artificial intelligence becomes deeply integrated into vehicle systems, with 42% of professionals now using AI for autonomous vehicle design. This technological shift brings unprecedented challenges to functional safety assurance, as traditional deterministic safety frameworks must adapt to handle AI's inherently probabilistic nature while still meeting rigorous ISO 26262 safety standards.

Key Takeaways

Safety concerns are paramount in AI-powered vehicle development, cited by 49% of developers as their top priority

ISO 26262 is evolving with specialized extensions to address AI's non-deterministic behaviors in automotive systems

Digital twin technology provides virtual validation environments that significantly reduce development time and enhance safety verification

Adoption of coding standards has reached 86% among automotive teams, with 53% using static analysis tools

Future automotive platforms require multi-layered safety approaches combining continuous monitoring, explainable AI, and real-time metrics

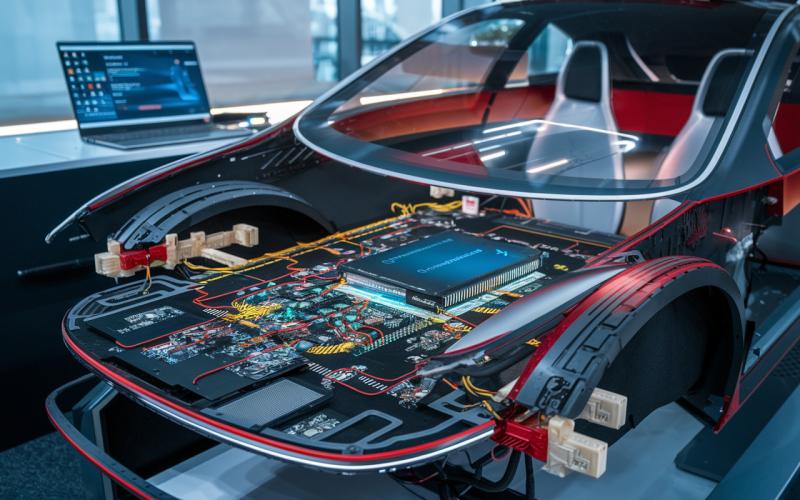

AI Convergence with Automotive Functional Safety

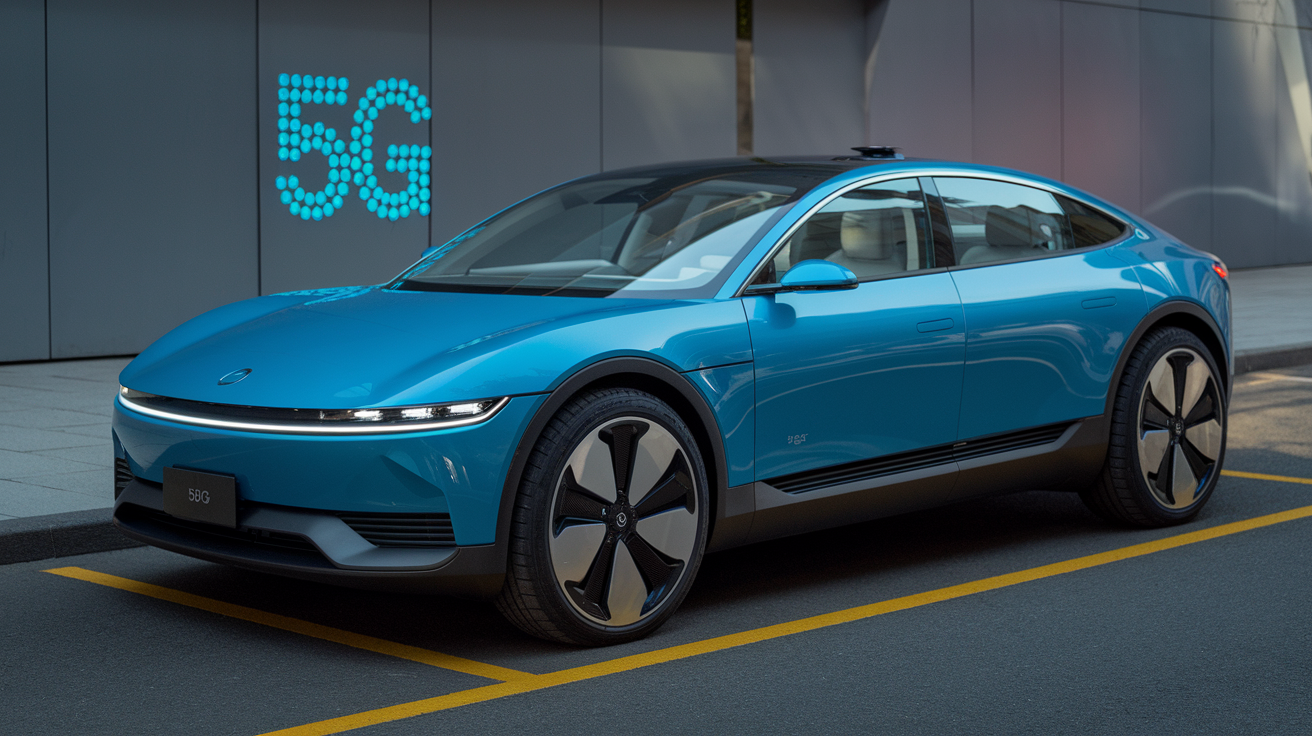

The automotive landscape is changing rapidly with artificial intelligence integration driving innovation in autonomous and connected vehicles. Recent industry data shows that 41% of connected vehicles now incorporate AI in at least some components, with a 9% year-over-year increase in AI adoption for autonomous vehicle design. This shift represents both a tremendous opportunity and a significant challenge for automotive safety engineers.

Advanced Driver Assistance Systems (ADAS), in-vehicle infotainment, and lidar-based perception modules lead the way in AI application areas. These systems deliver enhanced capabilities but introduce complexity that traditional safety frameworks weren't designed to address. For automotive professionals, safety remains the primary concern, with nearly half citing it as their top priority when developing AI-powered systems.

The Evolution of ISO 26262 for AI-Powered Systems

ISO 26262, the international standard for automotive functional safety, is adapting to meet the challenges presented by AI and machine learning systems. Part 11, added in the standard's 2018 second edition, provides guidance on applying ISO 26262 to semiconductors in advanced electronics platforms. Meanwhile, ISO/PAS 8800, which addresses safety and artificial intelligence in road vehicles, has reached a reported 71% adoption rate among automotive professionals.

Traditional verification methods face fundamental challenges when applied to AI systems due to their non-deterministic behaviors, model uncertainty, and data-driven learning processes. This uncertainty conflicts with the deterministic nature of conventional safety standards, pushing the industry to develop new approaches. Explainability and transparency in AI models have become essential requirements for both safety assessment and regulatory compliance.

AI-based Hazard Analysis and Risk Assessment (HARA) tools now provide automated, real-time hazard identification, enhancing the traditional safety analysis process with data-driven insights. These tools improve the accuracy of severity and controllability evaluations, leading to more effective risk mitigation strategies.
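To make the severity and controllability evaluations concrete: in a HARA they combine with an exposure rating to give each hazardous event an Automotive Safety Integrity Level (ASIL). The sketch below is a minimal illustration in Python, not any tool's actual implementation. The function name `determine_asil` is hypothetical, and it assumes the widely used additive shortcut (sum the class indices), which reproduces the ISO 26262-3 determination table for classes S1-S3, E1-E4, and C1-C3. The S0, E0, and C0 classes, which carry no ASIL, are deliberately left out.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Return the ASIL for class indices S (1-3), E (1-4), and C (1-3).

    Illustrative helper only; the name and signature are assumptions.
    """
    if not (1 <= severity <= 3 and 1 <= exposure <= 4 and 1 <= controllability <= 3):
        raise ValueError("expected S1-S3, E1-E4, C1-C3")

    total = severity + exposure + controllability
    # Additive shortcut for the determination table:
    # 10 -> D, 9 -> C, 8 -> B, 7 -> A, anything lower -> QM (quality managed).
    return {10: "D", 9: "C", 8: "B", 7: "A"}.get(total, "QM")
```

For example, `determine_asil(3, 4, 3)` yields `"D"`, while the same hazard rated C1 for controllability drops to `"B"`. This is why the accuracy of each individual rating matters so much for downstream risk mitigation.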

Addressing Code Complexity and Non-Determinism

Modern automotive systems with AI components require thousands of simulation runs for proper validation, especially in high-level automated driving scenarios. The sheer complexity of these systems presents unprecedented challenges for safety engineers accustomed to deterministic software architectures. Traditional approaches struggle with AI's probabilistic reasoning and data-dependent outcomes, requiring new frameworks for safety assurance.

Several strategies have emerged to mitigate these challenges:

Development of new safety metrics specifically designed for AI decision-making processes

Implementation of continuous verification cycles that enhance model robustness

Integration of explainable AI (XAI) modules to increase transparency and traceability

Leveraging AI-powered HARA for more accurate risk assessments

Utilizing continuous learning capabilities that leverage big data for enhanced safety assurance
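One way to see why validation campaigns run into the thousands of simulations is a simple statistical argument. The sketch below assumes independent runs drawn from a representative scenario distribution and zero observed failures, which is a strong simplification of real validation. The function names are illustrative only.

```python
import math


def zero_failure_upper_bound(runs: int, confidence: float = 0.95) -> float:
    """Upper bound on the per-run failure probability after `runs`
    failure-free, independent simulation runs."""
    if runs <= 0 or not 0.0 < confidence < 1.0:
        raise ValueError("runs must be positive and confidence in (0, 1)")
    # Solve (1 - p) ** runs = 1 - confidence for p.
    return 1.0 - (1.0 - confidence) ** (1.0 / runs)


def runs_needed(target_failure_prob: float, confidence: float = 0.95) -> int:
    """Failure-free runs required to claim the target bound at this confidence."""
    if not 0.0 < target_failure_prob < 1.0 or not 0.0 < confidence < 1.0:
        raise ValueError("probabilities must be in (0, 1)")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - target_failure_prob))
```

Under these assumptions, demonstrating a per-run failure probability below 0.1% at 95% confidence takes roughly 3,000 failure-free runs, and the count grows in inverse proportion to the target.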

Quality Assurance Through Coding Standards and Testing

The pursuit of software quality has become increasingly systematic in automotive development, with 86% of teams now using at least one formal coding standard. Static analysis and SAST (Static Application Security Testing) tools have gained traction, with 53% of teams employing these technologies to detect potential issues before runtime. Additionally, 89% of teams track code quality metrics, marking a significant 12% increase from the previous year.

MISRA C, AUTOSAR C++, and the upcoming MISRA C:2025 have established themselves as industry benchmarks, with 53% of developers anticipating MISRA C:2025's impact on their development practices. Improved quality stands out as the main motivation for static analysis tool adoption, cited by 30% of developers.

Successful strategies for ensuring software quality include:

Well-defined coding standards that reduce defects and increase maintainability

Automated test generation coupled with comprehensive requirements traceability

Continuous integration pipelines that enforce software quality gates

Regular code reviews and automated static analysis checks

Comprehensive test coverage metrics and reporting
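As a rough illustration of how a continuous integration pipeline might enforce such quality gates, the sketch below checks a build summary against thresholds. Everything here is hypothetical: the `QualityReport` fields, the `gate_failures` function, and the threshold values are placeholders, not recommendations from any standard or the interface of a specific tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class QualityReport:
    """Hypothetical summary a CI job might assemble from tool outputs."""
    static_analysis_violations: int  # open coding-standard findings
    statement_coverage: float        # fraction of statements covered, 0.0 to 1.0
    untraced_requirements: int       # requirements with no linked test case


def gate_failures(report: QualityReport,
                  max_violations: int = 0,
                  min_coverage: float = 0.90) -> List[str]:
    """Return the reasons the build should be blocked (empty list if none)."""
    failures: List[str] = []
    if report.static_analysis_violations > max_violations:
        failures.append(
            f"{report.static_analysis_violations} static analysis violations "
            f"(limit {max_violations})"
        )
    if report.statement_coverage < min_coverage:
        failures.append(
            f"statement coverage {report.statement_coverage:.0%} "
            f"is below {min_coverage:.0%}"
        )
    if report.untraced_requirements > 0:
        failures.append(
            f"{report.untraced_requirements} requirements lack a linked test"
        )
    return failures
```

A pipeline step could call `gate_failures` on the collected report and exit with a non-zero status whenever the returned list is non-empty, so that a merge is blocked until every listed reason is resolved.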

Digital Twins: Transforming Safety Validation

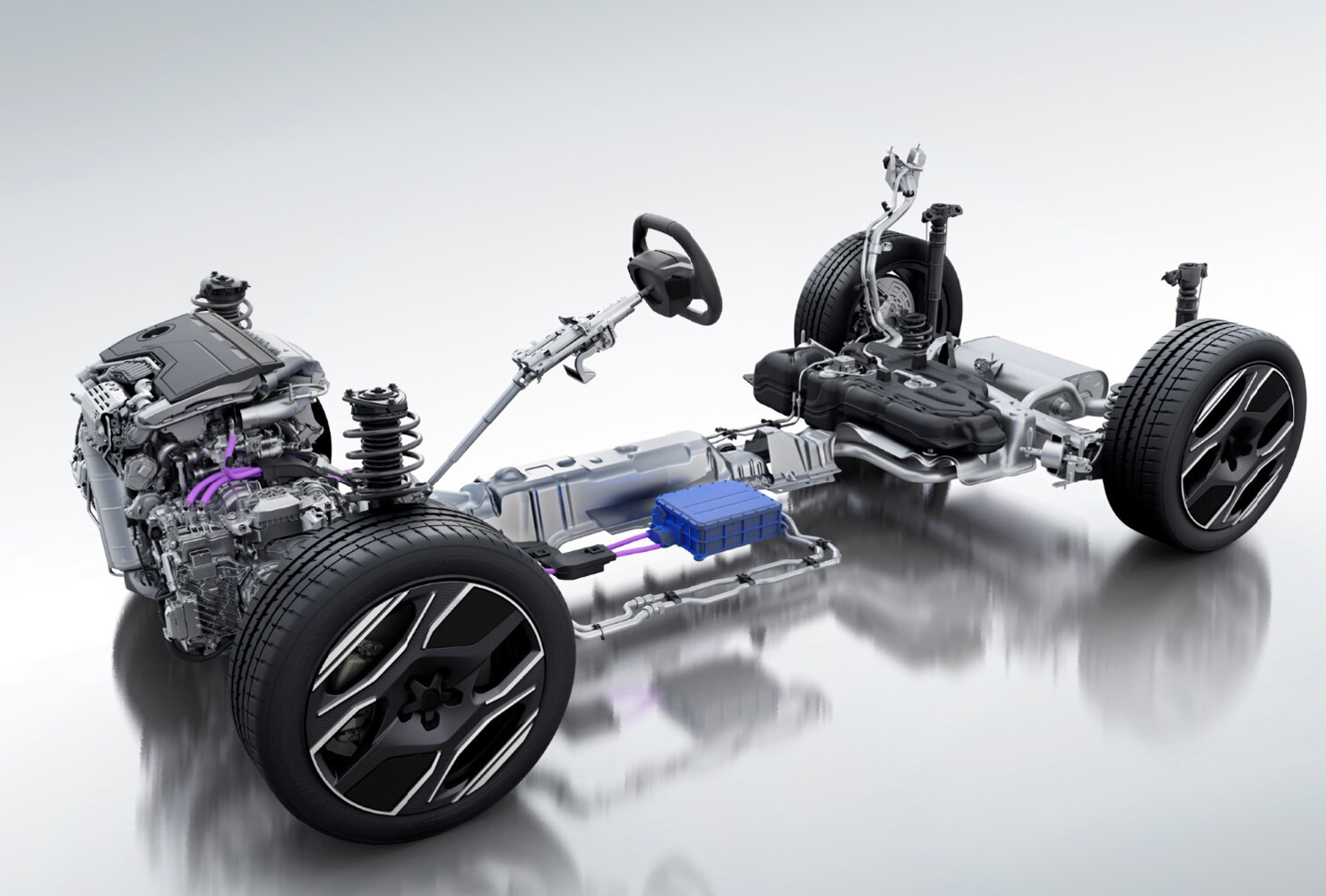

Digital twin technology is revolutionizing automotive development by creating virtual representations of physical vehicles, components, and manufacturing processes. These sophisticated virtual models enable real-time monitoring, predictive maintenance, and performance optimization without the physical limitations of traditional testing.

AI and machine learning analysis of digital twin data provides unprecedented capabilities to detect patterns, predict failures, and enhance safety prognostics. Cloud-based simulation environments allow for parallelized, scalable testing, dramatically reducing analysis time for complex autonomous systems.
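The parallelized testing described above can be pictured as fanning seeded scenarios out to a pool of workers and collecting the ones that fail. The sketch below is a toy under stated assumptions: `run_scenario` is a hypothetical stand-in for a real simulator call, its braking model and parameter ranges are invented for illustration, and a local thread pool substitutes for cloud workers.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable, List


def run_scenario(seed: int) -> bool:
    """Toy stand-in for one simulation run; True means the safety check passed."""
    rng = random.Random(seed)            # seeded, so each scenario is reproducible
    speed = rng.uniform(10.0, 30.0)      # ego speed in m/s (assumed range)
    gap = rng.uniform(20.0, 120.0)       # distance to obstacle in m (assumed range)
    reaction_time, decel = 0.5, 6.0      # s and m/s^2, illustrative constants
    stopping_distance = speed * reaction_time + speed ** 2 / (2.0 * decel)
    return stopping_distance <= gap


def find_failing_seeds(seeds: Iterable[int], workers: int = 8) -> List[int]:
    """Run all scenarios on a worker pool and return the seeds that failed."""
    seed_list = list(seeds)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(run_scenario, seed_list))  # order is preserved
    return [seed for seed, passed in zip(seed_list, results) if not passed]
```

Because every scenario is identified by its seed, a failing case found in the sweep can be replayed exactly. In practice each worker would more likely submit a job to a remote simulator than compute locally, since Python threads do not speed up CPU-bound work.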

The integration of digital twins with augmented reality and blockchain technologies further enhances traceability, transparency, and data security. This convergence creates a comprehensive platform for validating AI-driven systems against safety requirements while maintaining clear documentation of test results for certification purposes.

Challenges of Safety Assurance for AI-Based Systems

The explosion in software complexity accompanying AI integration presents fundamental challenges to traditional safety certification approaches. Non-deterministic AI behavior, where outputs depend on learned patterns rather than explicitly specified rules and the same input may not always produce the same output, conflicts with the deterministic nature of conventional safety frameworks.

Organizations must develop new methodologies to guarantee safety in probabilistic decision-making contexts. This requires a combination of robust testing protocols, continuous monitoring systems, and carefully designed fallback safety mechanisms that engage when AI encounters edge cases or uncertain situations.
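A fallback mechanism of this kind is often pictured as a simple supervisor wrapped around the AI component. The sketch below is a minimal illustration, assuming the model exposes per-action probabilities. The names `Decision` and `supervise`, the 0.9 threshold, and the `"minimal_risk_maneuver"` label are all hypothetical. Raw model confidence is also known to be an imperfect proxy for uncertainty, so a production monitor would rely on additional evidence.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Decision:
    action: str
    source: str  # "ai" when the model's choice is used, "fallback" otherwise


def supervise(action_probs: Sequence[float],
              actions: Sequence[str],
              min_confidence: float = 0.9,
              fallback_action: str = "minimal_risk_maneuver") -> Decision:
    """Accept the model's top action only when its confidence is high enough."""
    if not action_probs or len(action_probs) != len(actions):
        raise ValueError("need one probability per candidate action")

    # Treat malformed model output (NaN) as uncertainty and fail safe.
    if any(math.isnan(p) for p in action_probs):
        return Decision(fallback_action, "fallback")

    best = max(range(len(action_probs)), key=lambda i: action_probs[i])
    if action_probs[best] < min_confidence:
        return Decision(fallback_action, "fallback")
    return Decision(actions[best], "ai")
```

With candidate actions `["keep_lane", "brake"]`, probabilities of `[0.97, 0.03]` pass through as the model's choice, whereas `[0.55, 0.45]` triggers the fallback. The design choice worth noting is that the supervisor itself stays small and deterministic, which keeps it amenable to conventional verification.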

Perhaps the most significant challenge facing automotive developers is balancing innovation speed with thorough safety validation. Market pressures drive rapid development cycles, while safety considerations demand extensive testing and verification. Finding the right balance is crucial for delivering competitive yet safe AI-powered vehicles.

Implementing Multi-Layered Safety Assurance

A comprehensive approach to AI safety in automotive systems requires organization-wide expertise in functional safety standards. Continuous training on ISO 26262 and its extensions has become essential for maintaining compliance as these standards evolve to address AI technologies.

AI explainability frameworks and monitoring tools directly address non-deterministic behaviors by providing insight into decision-making processes. Digital twins and cloud-based simulation enable comprehensive validation of autonomous features across thousands of scenarios that would be impractical to test physically.

Collaboration with regulatory bodies helps shape evolving standards like ISO/PAS 8800, so that they are both practical to implement and effective at ensuring safety. The most successful approaches combine multiple safety layers:

Rigorous coding standards and automated analysis

Comprehensive simulation in virtual environments

Runtime monitoring and verification systems

Clear fallback mechanisms for edge cases

Continuous learning from field performance data

Future Directions: Redefining Safety for Next-Generation Platforms

The automotive industry is moving toward standardized frameworks specifically designed for AI safety validation. These frameworks will likely place greater emphasis on runtime monitoring and verification of AI systems in production vehicles, enabling continuous safety assessment throughout a vehicle's lifecycle.

Development of specialized hardware accelerators for safety-critical AI applications will help address the computational demands of these systems while maintaining deterministic performance characteristics. The integration of cybersecurity considerations with functional safety requirements acknowledges that modern vehicles must be protected against both technical failures and malicious attacks.

Collaborative industry initiatives are establishing common safety metrics and benchmarks for AI systems, promoting standardization while allowing for innovation. These efforts, combined with advancing regulatory frameworks, are creating a more structured approach to ensuring AI can be safely integrated into the vehicles of tomorrow.

Sources

Automotive Testing Technology International: Report reveals shift toward safety and AI in automotive software engineering

eenewseurope: 2025 automotive report highlights code quality, AI and safety

SemiEngineering: Functional Safety Insights For Today's Automotive Industry

Synopsys: 5 Automotive Functional Safety Insights

LDRA: How AI Impacts the Qualification of Safety-Critical Automotive Software

Mobility Engineering Tech: Complexity of Autonomous-Systems Simulation