Discover how accuracy, diversity and complexity in AI datasets drive superior performance, with synthetic data generation producing thousands of test cases in minutes.

Drivetech Partners

The quality of datasets used in AI test generation can make or break the performance of artificial intelligence systems. Research consistently shows that higher-quality training data leads to better-performing AI models, with larger and more diverse data pools yielding superior results when curated thoughtfully.

Key Takeaways

Three critical qualities determine dataset effectiveness: accuracy, diversity, and complexity; without these, AI tests become unreliable

Synthetic data generation can produce thousands of test cases in minutes, compared with weeks of manual creation

Dataset diversity goes beyond bias prevention - it fundamentally improves performance by helping models handle unknown scenarios

Rigorous filtering using multi-stage quality checks ensures only high-quality data makes it into final test datasets

Privacy-preserving techniques allow organizations to generate realistic yet anonymous test data that maintains utility while protecting sensitive information

The Foundation of AI Test Generation: Quality Datasets

High-quality datasets are the foundation of effective AI test generation. The size of the source pool matters too: in studies that curated a 60B-token training set from pools of 600B, 360B, or 180B tokens, models trained on data curated from the larger pools consistently performed better.
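To make those pool sizes concrete, the short sketch below computes how much of each pool survives curation down to the 60B-token target. The numbers come from the paragraph above. Reading the result as "a larger pool lets the curator be more selective" is our interpretation, not a finding stated by the studies.

```python
# Pool sizes and target size, in billions of tokens, as quoted in the text.
POOL_SIZES_B = [600, 360, 180]
TARGET_B = 60


def keep_fraction(pool_b: float, target_b: float = TARGET_B) -> float:
    """Return the fraction of the pool that survives curation."""
    return target_b / pool_b


for pool in POOL_SIZES_B:
    print(f"{pool}B pool -> keep {keep_fraction(pool):.1%}")
# 600B pool -> keep 10.0%
# 360B pool -> keep 16.7%
# 180B pool -> keep 33.3%
```

Curating from the 600B pool means keeping only one token in ten, while the 180B pool forces the curator to keep one in three.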

Three critical characteristics determine data quality: accuracy (factual correctness), diversity (covering various use cases), and complexity (detailed answers that demonstrate reasoning). These qualities aren't optional but necessary for AI systems that can handle real-world complexity.

Dataset curation isn't random selection but involves systematic mixing of data sources in optimal proportions. Multiple research teams including Liu et al. (2024), Ye et al. (2024), and Ge et al. (2024) have demonstrated the value of this approach. Without thoughtful curation, even massive datasets can fail to deliver meaningful results.
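As a rough illustration of mixing sources in fixed proportions, the sketch below draws each example from a source chosen according to a mixing weight. The source names, example texts, and weights are hypothetical. The cited papers concern how to find good proportions, which this sketch does not attempt.

```python
import random


def sample_mixture(sources, weights, n, seed=0):
    """Draw n examples, choosing the source of each draw by its mixing weight."""
    if set(sources) != set(weights):
        raise ValueError("sources and weights must have the same keys")
    rng = random.Random(seed)  # seeded so the mix is reproducible
    names = list(sources)
    probs = [weights[name] for name in names]
    picked = rng.choices(names, weights=probs, k=n)
    return [(name, rng.choice(sources[name])) for name in picked]


# Hypothetical sources and proportions, for illustration only.
sources = {
    "code": ["def add(a, b): ...", "SELECT * FROM t"],
    "web": ["How to bake bread", "City travel guide"],
    "math": ["2 + 2 = 4"],
}
weights = {"code": 0.5, "web": 0.3, "math": 0.2}

mixed = sample_mixture(sources, weights, n=1000)
```

Sampling with replacement keeps the sketch short. A real pipeline would usually deduplicate and sample without replacement from much larger sources.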

The Evolution of Synthetic Data Generation for AI Testing

Synthetic data generation has transformed testing by enabling the creation of high-quality test cases in minutes rather than weeks. This efficiency is particularly valuable when real data is scarce, sensitive, or insufficient for comprehensive testing.

Distillation and self-improvement are the two primary methods for generating synthetic datasets. In distillation, a stronger model generates the data that a smaller model learns from; in self-improvement, a model learns from its own outputs, gradually refining the generated data to better match the desired characteristics.

Implementation tools like Faker.js allow testers to generate synthetic data for various scenarios, significantly streamlining testing workflows. For teams with limited resources, these tools offer an accessible entry point into synthetic data generation.
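The idea behind such tools can be sketched without any dependency. The example below is a Python standard-library stand-in, not the Faker.js API: the name lists, field names, and the `fake_users` helper are all assumptions made for illustration.

```python
import random
import string

# Small hypothetical vocabularies; a real generator ships far larger ones.
FIRST_NAMES = ["Ada", "Grace", "Alan", "Linus", "Margaret"]
LAST_NAMES = ["Lovelace", "Hopper", "Turing", "Torvalds", "Hamilton"]


def fake_user(rng):
    """Build one synthetic user record from random parts."""
    first = rng.choice(FIRST_NAMES)
    last = rng.choice(LAST_NAMES)
    suffix = "".join(rng.choices(string.digits, k=3))
    return {
        "name": f"{first} {last}",
        "email": f"{first}.{last}{suffix}@example.com".lower(),
        "age": rng.randint(18, 90),
    }


def fake_users(n, seed=42):
    """Generate n records; a fixed seed makes the test data reproducible."""
    rng = random.Random(seed)
    return [fake_user(rng) for _ in range(n)]


users = fake_users(5000)
```

Seeding the generator matters in testing: a failing test can be rerun against exactly the same synthetic records.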

Data augmentation creates new variations of existing data to improve diversity and quality of datasets. This technique is particularly useful when working with limited original data, as it can multiply the effective size and variety of training examples.
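For text inputs, a minimal form of augmentation is to vary surface features such as casing, punctuation, and word choice. The sketch below assumes a tiny hand-written synonym table, which is hypothetical. Generated variants should still be reviewed, since a synonym swap can change the meaning.

```python
# Hypothetical synonym table; real projects use larger or learned resources.
SYNONYMS = {"cancel": ["stop", "end"], "order": ["purchase"]}


def augment(text):
    """Return surface-level variants of text, excluding the original."""
    variants = {text.lower(), text.upper(), text.rstrip("?.!")}
    words = text.split()
    for i, word in enumerate(words):
        core = word.rstrip("?.!,")  # the word without trailing punctuation
        tail = word[len(core):]     # the punctuation, kept for the variant
        for alt in SYNONYMS.get(core.lower(), []):
            variants.add(" ".join(words[:i] + [alt + tail] + words[i + 1:]))
    variants.discard(text)
    return sorted(variants)


variants = augment("How do I cancel my order?")
```

Here one seed question yields six variants, including "How do I stop my order?" and "How do I cancel my purchase?".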

Diverse Datasets: The Key to Unbiased and Generalizable AI Tests

A growing body of evidence highlights the risk of algorithmic bias, which can perpetuate existing inequities in applications ranging from healthcare to finance. This bias often stems directly from limitations in the training datasets used.

Dataset diversity offers benefits beyond preventing bias - it fundamentally improves algorithmic performance by helping models generalize to unseen cases. An AI system trained on narrow data may appear competent during limited testing but fail catastrophically when deployed to diverse real-world scenarios.

Several challenges contribute to dataset bias: systematic inequalities in dataset curation, unequal opportunity to participate in research, and inequalities of access. Addressing these requires intentional effort throughout the data collection process.

The STANDING Together initiative aims to develop standards for transparency of data diversity in health datasets. This type of industry collaboration represents an important step toward more accountable AI development practices.

A fundamental issue in AI development is that datasets often aren't fully representative of populations or phenomena being modeled, creating significant divergence from real-world conditions. Sampling bias occurs when data is collected from non-randomized sources like online questionnaires or social media, creating non-generalizable datasets.
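One simple way to spot this kind of mismatch is to compare each group's share of the dataset with its share of a reference population. The sketch below assumes the reference shares are known. The age bands and figures are invented for illustration.

```python
from collections import Counter


def representation_gaps(samples, reference):
    """Return dataset share minus reference share for each group."""
    if not samples:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    total = len(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference.items()
    }


# Hypothetical data: an online survey that skews young.
samples = ["18-34"] * 70 + ["35-54"] * 25 + ["55+"] * 5
reference = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

gaps = representation_gaps(samples, reference)
```

A positive gap means the group is over-represented and a negative gap means it is under-represented. In this invented example the youngest band is over-represented by 40 percentage points.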

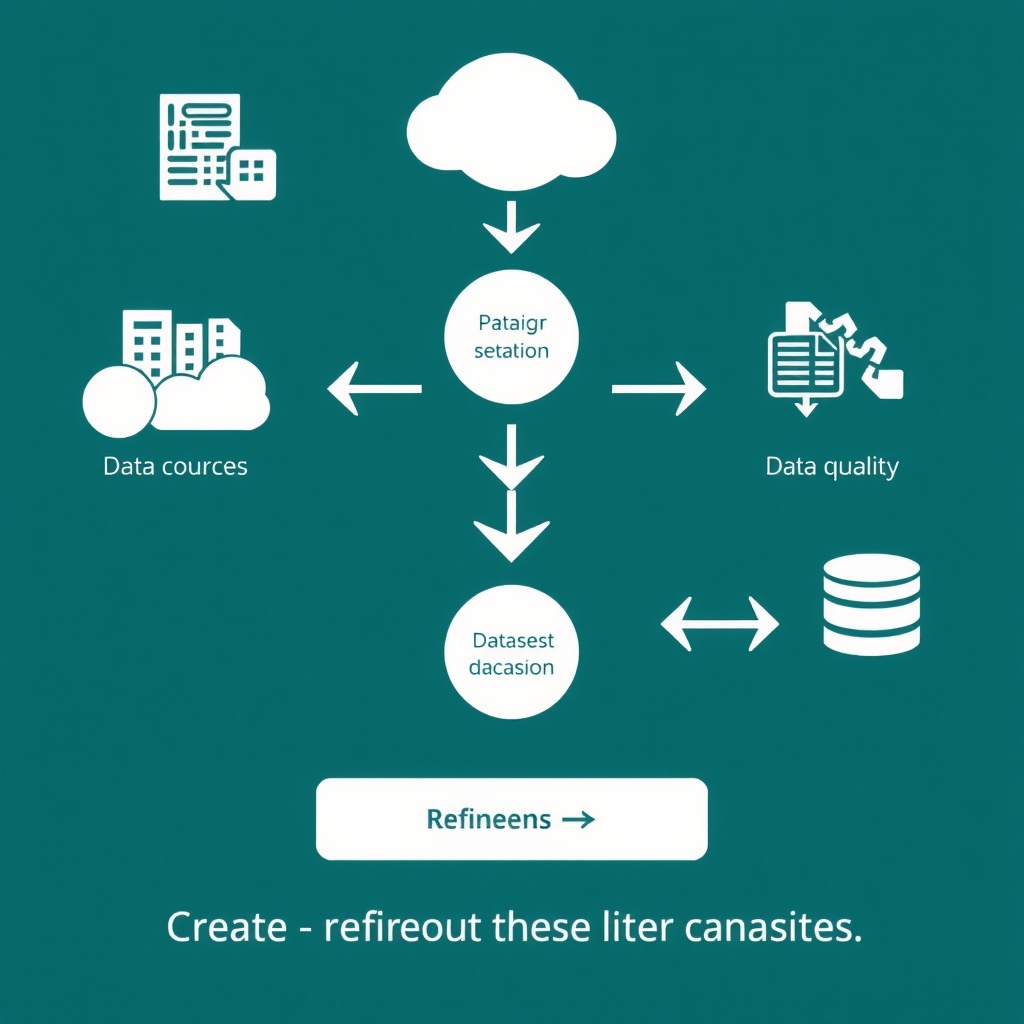

Ensuring Data Quality Through Rigorous Filtering and Validation

Effective dataset creation employs two-stage filtering: quality checks during both context generation and the generation of synthetic inputs from these contexts. This multi-layered approach helps catch issues that might slip through a single filter.

Eight criteria guide the filtering of contexts: clarity, depth, structure, relevance, precision, novelty, conciseness, and impact. Each criterion helps ensure that every included example contributes meaningfully to the dataset's overall quality.

Employing LLMs as judges has proven to be a robust method for identifying and eliminating low-quality contexts. These AI-powered filters can evaluate massive amounts of potential training data much faster than human reviewers alone.

Quality filtering helps ensure no valuable resources are wasted and that the final dataset only contains high-quality "goldens" - examples that truly represent ideal performance. This careful curation pays dividends in model performance downstream.
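The two-stage flow described in this section can be sketched as a small pipeline. Everything below is an assumption for illustration: the judge functions are toy heuristics standing in for LLM calls, and the 0.5 threshold and averaging of criterion scores are arbitrary choices. Only the eight criterion names come from the text.

```python
CRITERIA = ("clarity", "depth", "structure", "relevance",
            "precision", "novelty", "conciseness", "impact")


def context_score(context, context_judge):
    """Average the judge's score over the eight criteria."""
    return sum(context_judge(context, c) for c in CRITERIA) / len(CRITERIA)


def build_goldens(contexts, make_input, context_judge, input_judge,
                  threshold=0.5):
    """Keep only pairs that pass both filtering stages."""
    goldens = []
    for context in contexts:
        # Stage 1: drop low-quality contexts before spending effort on them.
        if context_score(context, context_judge) < threshold:
            continue
        synthetic_input = make_input(context)
        # Stage 2: drop low-quality inputs generated from a good context.
        if input_judge(context, synthetic_input) < threshold:
            continue
        goldens.append({"context": context, "input": synthetic_input})
    return goldens


# Toy stand-ins; a real pipeline would prompt an LLM in each of these.
def toy_context_judge(context, criterion):
    return min(len(context.split()) / 8, 1.0)


def toy_make_input(context):
    return f"What does the policy say about {context.split()[0].lower()}?"


def toy_input_judge(context, synthetic_input):
    return 1.0 if synthetic_input.endswith("?") else 0.0


contexts = [
    "Refunds are issued within 14 days of a return request being approved.",
    "misc",
]
goldens = build_goldens(contexts, toy_make_input,
                        toy_context_judge, toy_input_judge)
```

Filtering contexts first avoids generating inputs from material that would be discarded anyway. In this run the one-word context is dropped at stage 1.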

Rigorous experimental design, hypothesis generation, and hypothesis testing are often downplayed in AI projects, contributing to confirmation bias. Construct validity is particularly important - AI teams should demonstrate that applications measure what they intend to measure.

Leveraging Multiple Data Sources for Comprehensive Test Coverage

Real user data represents one of the best testing sources when a product is live. Collecting actual user interactions, especially cases where the AI made mistakes, provides authentic test cases that reflect genuine usage patterns.

Historical logs enable valuable backtesting capability - testing how a new AI would have performed on past inputs compared to previous versions. This direct comparison helps quantify improvements and identify potential regressions.
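A backtest of this kind can be as simple as replaying logged inputs through both versions and sorting the outcomes. The sketch below assumes each log entry has an `input` and a known `expected` label, and that exact match is a fair correctness check. The log format and both toy classifiers are hypothetical.

```python
def backtest(logs, old_system, new_system):
    """Replay logged inputs through both systems and compare outcomes."""
    report = {"improved": [], "regressed": [], "unchanged": 0}
    for entry in logs:
        old_ok = old_system(entry["input"]) == entry["expected"]
        new_ok = new_system(entry["input"]) == entry["expected"]
        if new_ok and not old_ok:
            report["improved"].append(entry["input"])
        elif old_ok and not new_ok:
            report["regressed"].append(entry["input"])
        else:
            report["unchanged"] += 1
    return report


# Toy intent classifiers standing in for two versions of an AI system.
def old_system(text):
    return "refund" if "refund" in text else "other"


def new_system(text):
    lowered = text.lower()
    return "refund" if "refund" in lowered or "money back" in lowered else "other"


logs = [
    {"input": "I want a refund", "expected": "refund"},
    {"input": "REFUND please", "expected": "refund"},
    {"input": "Is there a money back guarantee?", "expected": "other"},
    {"input": "Where is my parcel?", "expected": "other"},
]
report = backtest(logs, old_system, new_system)
```

Listing the regressed inputs, rather than only counting them, points directly at what to debug. Here the new version fixes the uppercase case but mislabels the guarantee question.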

Public benchmarks provide open datasets designed to compare AI systems across predefined test cases. These are particularly useful for specific domains (e.g., MBPP, Mostly Basic Python Problems, for coding tasks) where standardized evaluation allows for meaningful comparisons between different approaches.

When real data isn't available or sufficient, creating test datasets from scratch using LLMs offers a practical alternative. This synthetic generation approach can fill specific gaps in test coverage that might otherwise remain unaddressed.

In a real-world implementation, Segment built an LLM-powered audience builder and tested it using real queries that users had previously constructed. This approach ensured their system was evaluated against actual user behavior rather than hypothetical scenarios.

Privacy-Preserving Approaches to AI Test Data Management

Synthetic data generators like Mostly AI and Tonic.ai offer solutions that preserve privacy while maintaining data utility. These tools have become increasingly sophisticated, allowing organizations to generate realistic test data without exposing sensitive information.

Tonic.ai provides automated data provisioning with isolated test datasets that are fully representative of production data but privacy-safe. This capability is particularly valuable for organizations working with highly regulated data like healthcare or financial records.

Advanced tools can identify sensitive data types and realistically mask or synthesize new values while maintaining consistency and relationships across databases. This ensures that testing remains meaningful even when using synthetic rather than original data.
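One way to keep relationships intact while masking is deterministic pseudonymization: the same real value always maps to the same fake value, so joins across tables still work. The sketch below uses a keyed hash for this. It is not how Tonic.ai or Mostly AI work internally, the key and table layouts are hypothetical, and pseudonymization alone does not amount to full anonymization.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would live in a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymize_email(email, key=SECRET_KEY):
    """Map an email to a stable fake address using a keyed hash."""
    normalized = email.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}@example.com"


# Two hypothetical tables that reference the same customer.
users = [{"id": 1, "email": "alice@corp.test"}]
orders = [{"order_id": 10, "customer_email": "Alice@corp.test"}]

masked_users = [{**u, "email": pseudonymize_email(u["email"])} for u in users]
masked_orders = [
    {**o, "customer_email": pseudonymize_email(o["customer_email"])}
    for o in orders
]
```

Because the mapping is keyed and deterministic, the masked user and the masked order still point at the same customer, while the original address no longer appears in either table.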

Privacy-preserving synthetic data enables organizations to leverage unstructured data in AI development while safeguarding against leaks and ensuring regulatory compliance. This balancing act between utility and protection is essential for responsible AI development.

Looking forward, NIST plans to work with trustworthy and responsible AI communities to explore mitigants and governance processes for bias. These collaborative efforts aim to develop standardized approaches that can be widely adopted across the industry.

Measuring Success: Evaluation Metrics for Test Dataset Effectiveness

Automatic testing scores system outputs in each iteration using various LLM evaluation techniques. This approach provides consistent feedback throughout development, allowing teams to track progress objectively.

Using a fixed set of test cases helps track progress across experiments such as prompt tweaking or model changes. This consistency enables direct comparison between different approaches, making it clear which changes actually improve performance.

An evaluation or test dataset consists of expected inputs and optional expected outputs representing real-world use cases. For customer support chatbots, test datasets include common user questions along with ideal responses that demonstrate desired system behavior.
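A fixed test set with optional expected outputs can be represented very simply. The sketch below is one possible shape, not a standard: the `EvalCase` type, the substring-based scorer, and both toy chatbot versions are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EvalCase:
    input: str
    expected: Optional[str] = None  # optional, as described in the text


def contains_expected(output, expected):
    """Crude scorer: pass if the expected phrase appears in the output."""
    return expected.lower() in output.lower()


def pass_rate(system, cases, scorer=contains_expected):
    """Score a system on the cases that have an expected output."""
    scored = [c for c in cases if c.expected is not None]
    if not scored:
        raise ValueError("no cases with an expected output")
    passed = sum(scorer(system(c.input), c.expected) for c in scored)
    return passed / len(scored)


# The same fixed cases are reused for every experiment.
CASES = [
    EvalCase("What is the return window?", "30 days"),
    EvalCase("Do you ship abroad?", "yes"),
    EvalCase("Tell me a joke"),  # no expected output: skipped by pass_rate
]


def chatbot_v1(question):
    return "Returns are accepted within 30 days."


def chatbot_v2(question):
    if "ship" in question.lower():
        return "Yes, we ship worldwide."
    return "Returns are accepted within 30 days."


v1_rate = pass_rate(chatbot_v1, CASES)
v2_rate = pass_rate(chatbot_v2, CASES)
```

Because both versions run against identical cases, the move from a pass rate of 0.5 to 1.0 can be attributed to the change rather than to a different test set. Cases without an expected output would need a reference-free scorer, such as an LLM judge.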

Tools like Evidently Cloud provide repeatable evaluations for complex systems like RAG and agents to enable quick iteration and confident deployment. These evaluation platforms streamline the process of assessing model performance across multiple dimensions.

The Future of Dataset Curation for AI Test Generation

AI-driven test data generation increasingly leverages machine learning algorithms to create realistic and diverse datasets that mimic real-world scenarios. Because the data can be regenerated as requirements change, test data can evolve alongside the systems being tested.

The integrity and relevance of input data fundamentally determine the reliability and value of AI-generated tests. As AI capabilities advance, the quality requirements for testing data will only increase.

Balancing comprehensive testing with privacy concerns requires innovative approaches. Organizations must find ways to thoroughly test their systems while respecting user rights and maintaining regulatory compliance.

Continuous dataset evolution and refinement are necessary as AI systems and their applications evolve. Static datasets quickly become outdated as user behaviors change and new edge cases emerge in real-world deployments.

Organizations increasingly recognize high-quality test datasets as a competitive advantage in AI development. Those who invest in superior data curation and testing methodologies can deliver more reliable, fair, and effective AI systems to their users.

Sources

mostly.ai

tonic.ai

github.com/mlabonne/llm-datasets

docs.ragas.io/en/stable/concepts/test_data_generation

blog.nashtechglobal.com/getting-started-with-ai-driven-test-data-generation-tools-and-techniques