AI-powered microcontrollers bring neural processing to edge devices, enabling complex vision and voice tasks with up to 600x faster machine-learning performance and lower power consumption.

Drivetech Partners

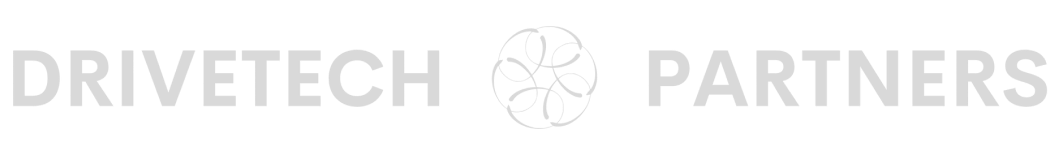

Modern microcontrollers (MCUs) are gaining dedicated neural processing units (NPUs), bringing AI capabilities to small, battery-powered devices that previously could not support them. These NPU-accelerated MCUs combine specialized hardware with optimized software frameworks to deliver large performance gains at much lower power, changing what is possible at the edge of computing networks.

Key Takeaways

Hybrid CPU/NPU architectures deliver up to 600x performance improvements over traditional microcontrollers while reducing energy consumption by up to 90%

On-device AI processing eliminates cloud dependency, providing enhanced privacy and security while reducing latency for time-sensitive applications

Advanced memory technologies and multi-core designs work alongside NPUs to prevent bottlenecks and enable simultaneous processing

Battery-powered IoT devices can now perform complex vision and voice recognition tasks that previously required high-power processors

The combination of AI acceleration and energy efficiency is reshaping design paradigms across consumer and industrial IoT applications

The Revolution of Neural Processing Units in MCUs

Neural Processing Units represent a fundamental shift in microcontroller architecture, bringing specialized AI acceleration to the smallest computing devices. Unlike traditional CPUs that process instructions sequentially, NPUs are designed specifically to handle the parallel computations and matrix operations that form the backbone of machine learning workloads. This specialized architecture delivers orders of magnitude better performance for AI tasks while consuming a fraction of the power.

The hybrid CPU/NPU architecture has emerged as the optimal solution for edge AI applications. This approach pairs a high-performance CPU core (such as an Arm Cortex-M55 or M85) with a dedicated NPU (like the Ethos-U55) on the same silicon. The CPU handles general-purpose computing tasks while offloading neural network operations to the NPU, creating a division of labor that maximizes both performance and power efficiency.
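
The division of labor described above can be sketched as a simple partitioning pass over a model's operators. This is a minimal illustration, not any vendor's compiler: the `NPU_SUPPORTED` set, the operator names, and the `partition` function are all hypothetical, and real toolchains work on full graphs rather than a flat list.

```python
from dataclasses import dataclass

# Hypothetical set of operators an NPU accelerates; real support depends on the part.
NPU_SUPPORTED = {"conv2d", "depthwise_conv2d", "max_pool", "relu", "fully_connected"}


@dataclass
class Segment:
    target: str  # "NPU" or "CPU"
    ops: list    # operator names that run back to back on that target


def partition(ops, supported=NPU_SUPPORTED):
    """Group a linear operator sequence into contiguous NPU and CPU segments."""
    segments = []
    for op in ops:
        target = "NPU" if op in supported else "CPU"
        if segments and segments[-1].target == target:
            segments[-1].ops.append(op)  # extend the current segment
        else:
            segments.append(Segment(target, [op]))  # hand off to the other processor
    return segments


# Illustrative model: unsupported operators fall back to the CPU.
model_ops = ["conv2d", "relu", "max_pool", "custom_nms", "fully_connected", "softmax"]
for segment in partition(model_ops):
    print(segment.target, segment.ops)
```

Each boundary between segments is a handoff between processors, so a model with fewer CPU fallbacks generally spends less time moving data back and forth.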

These integrated solutions eliminate the traditional dependency on cloud services for AI processing. By performing inference locally on the device, they minimize latency and ensure privacy while maintaining the power efficiency needed for battery-operated devices. The specialized processors are specifically optimized for the neural network workloads that power modern voice recognition, computer vision, and sensor analytics applications.

Benchmarking Next-Generation AI Microcontrollers

The performance gains achieved by NPU-accelerated MCUs are substantial. STMicroelectronics' STM32N6 microcontroller, with its proprietary Neural-ART Accelerator, delivers up to 600x the machine-learning performance of previous high-end STM32 MCUs. This enables complex AI workloads on devices that would previously have required far more powerful processors.

The Arm Cortex-M55 paired with the Ethos-U55 NPU achieves up to a 480x improvement in ML performance, along with up to a 90% reduction in energy consumption, compared to standard Cortex-M systems. NPUs like the Ethos-U55 deliver this acceleration in an area as small as 0.1 mm², which makes them suitable for integration into the smallest microcontrollers.

Other notable products pushing the boundaries of edge AI include the NXP MCX N54x series with the eIQ Neutron NPU and Texas Instruments' TMS320F28P55x with integrated AI acceleration. These performance gains allow battery-powered IoT devices to handle AI workloads that previously required a connection to high-power processors or cloud resources.

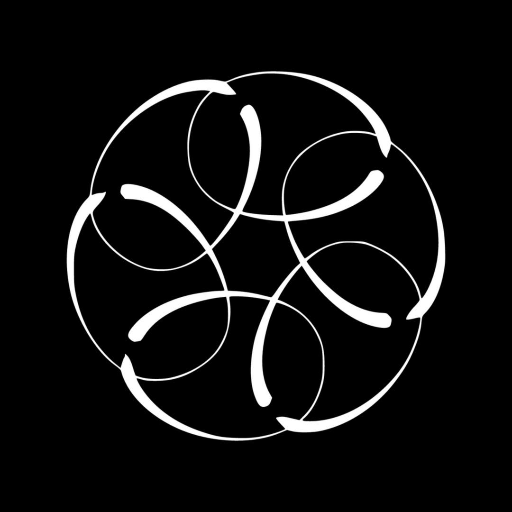

Hardware Innovations Enabling On-Device AI

Multi-core and high-frequency designs work in conjunction with embedded NPUs to enable simultaneous processing of AI and traditional workloads. The latest Cortex-M85 cores can reach frequencies that complement the parallel processing capabilities of attached NPUs, creating a system that can smoothly handle both computational tasks and neural network inference.

Advanced memory architectures represent another critical innovation. Optimized SRAM, DRAM, and Flash memory coupled with direct memory access (DMA) capabilities enable rapid processing of neural network weights without creating bottlenecks. This memory optimization is crucial because neural networks often require significant memory bandwidth to function efficiently.
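
A rough back-of-the-envelope calculation shows why weight storage and bandwidth matter. The parameter count and the 400 MB/s bandwidth figure below are illustrative assumptions, not specifications of any part mentioned in this article.

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Storage needed for the weights alone, rounded up to whole bytes."""
    return (num_params * bits_per_weight + 7) // 8


def transfer_time_ms(num_bytes: int, bandwidth_mb_per_s: float) -> float:
    """Time to stream num_bytes once at a sustained bandwidth (1 MB = 10**6 bytes)."""
    return num_bytes / (bandwidth_mb_per_s * 1_000_000) * 1000.0


params = 3_000_000                      # assumed model size
fp32_bytes = weight_bytes(params, 32)   # 12,000,000 bytes
int8_bytes = weight_bytes(params, 8)    # 3,000,000 bytes

# Assumed sustained memory bandwidth of 400 MB/s, for illustration only.
for name, size in (("float32", fp32_bytes), ("int8", int8_bytes)):
    print(name, size, "bytes,", round(transfer_time_ms(size, 400), 2), "ms per full pass")
```

Under these assumptions, reading every weight once per inference takes four times longer at float32 than at int8, which is one reason memory layout and DMA throughput can limit inference speed as much as raw compute.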

Many modern AI-accelerated MCUs also include co-integrated Image Signal Processors (ISPs) that offload pre-processing from the NPU and CPU. Handling steps such as image normalization before the data reaches the neural network makes vision workloads more efficient. These hardware advances are complemented by unified toolchains and optimization software that simplify model deployment and maximize on-device performance.
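
To make the normalization step concrete, here is a minimal software sketch of the kind of per-channel scaling an ISP or pre-processing stage might perform. The function name and the mean/std values are assumptions chosen for illustration; an actual ISP does this in hardware and the constants depend on how the model was trained.

```python
def normalize_pixels(pixels, mean, std):
    """Map 8-bit RGB pixels to zero-centered floats: (value / 255 - mean) / std per channel."""
    normalized = []
    for pixel in pixels:
        normalized.append(
            tuple((value / 255.0 - m) / s for value, m, s in zip(pixel, mean, std))
        )
    return normalized


# Assumed constants that map the range [0, 255] onto [-1, 1].
MEAN = (0.5, 0.5, 0.5)
STD = (0.5, 0.5, 0.5)

frame = [(0, 0, 0), (255, 255, 255), (128, 64, 32)]  # three example pixels
print(normalize_pixels(frame, MEAN, STD))
```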

AI Applications Transforming Edge Devices

Computer vision applications have seen perhaps the most dramatic transformation. Object detection and classification tasks that once required powerful GPUs can now run on battery-powered devices. Lightweight versions of popular neural network architectures like YOLO from Ultralytics can be optimized to run efficiently on NPU-accelerated microcontrollers, enabling applications from smart security systems to autonomous robots.

On-device speech recognition and sound analysis offer private, low-latency interactions without cloud dependency. This enables secure voice assistants and audio monitoring systems that protect user privacy by processing all data locally. The reduced latency also makes for a more responsive user experience than cloud-based solutions.

NPU-accelerated MCUs excel at sensor fusion, predictive maintenance, and real-time control systems. These capabilities are transforming applications in:

Smart home devices with intelligent environmental monitoring

Industrial IoT systems that can predict equipment failures before they occur

Robotics platforms with enhanced situational awareness

Medical devices with improved diagnostic capabilities

This broad application support is possible because NPUs typically ship with comprehensive operator libraries covering convolution, pooling, activation functions, and RNN/LSTM operations, which together account for most of the ML operations needed in embedded AI deployments.

Privacy and Security Advantages of Local AI Processing

On-device inference eliminates the need to transmit sensitive data to cloud services, substantially enhancing privacy and data sovereignty. This local processing approach keeps potentially sensitive information (like facial recognition data or voice recordings) contained within the device, addressing growing consumer and regulatory concerns about data privacy.

The security benefits extend beyond privacy. Local processing reduces the attack surface for potential data breaches and interception by minimizing data transmission. This approach also ensures continuous operation even when network connectivity is unavailable or unreliable - a critical advantage in applications like medical devices, security systems, and industrial controls.

From a business perspective, reduced cloud dependency lowers operational costs and eliminates subscription requirements for many applications. This can significantly reduce the total cost of ownership for IoT deployments while simplifying compliance with regional data protection regulations.

Energy Efficiency Breakthrough for Battery-Powered Devices

NPU-accelerated MCUs achieve dramatically lower power consumption per inference compared to CPU-only solutions. This efficiency translates directly into extended battery life for always-on AI applications in wearables, sensors, and portable devices - in some cases extending operational time from hours to days or even weeks.
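
The link between energy per inference and battery life can be estimated with simple arithmetic. Every number below (battery capacity, energy per inference, inference rate, sleep power) is an illustrative assumption rather than a measurement of any specific device.

```python
MJ_PER_MWH = 3600.0  # 1 mWh = 3.6 J = 3600 mJ


def battery_life_days(battery_mwh, energy_per_inference_mj, inferences_per_hour, sleep_power_mw):
    """Estimate runtime from average power: inference energy plus a constant sleep floor."""
    inference_power_mw = energy_per_inference_mj * inferences_per_hour / MJ_PER_MWH
    average_power_mw = inference_power_mw + sleep_power_mw
    return battery_mwh / average_power_mw / 24.0


# Assumed scenario: 1000 mWh battery, one inference per second, 0.05 mW sleep floor.
cpu_only_days = battery_life_days(1000, energy_per_inference_mj=50, inferences_per_hour=3600, sleep_power_mw=0.05)
npu_days = battery_life_days(1000, energy_per_inference_mj=1, inferences_per_hour=3600, sleep_power_mw=0.05)

print(f"CPU-only: {cpu_only_days:.1f} days, NPU-accelerated: {npu_days:.1f} days")
```

With these assumed figures the CPU-only device lasts under a day while the NPU-accelerated one runs for several weeks. Once inference energy is small, the sleep floor starts to dominate the budget.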

Specialized power management features further enhance energy efficiency. These include sophisticated sleep modes and activity-triggered wake capabilities that allow devices to remain in ultra-low-power states until specific conditions trigger AI processing. This approach is particularly valuable for environmental monitoring applications where devices might need to analyze sensor data only when certain threshold conditions are met.
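
The threshold-triggered pattern can be sketched in a few lines. This is a simulation over a list of readings, assuming a hypothetical `infer` callback in place of a real model; on actual hardware the comparison would typically be done by a low-power comparator or sensor interrupt while the core sleeps.

```python
def run_duty_cycle(readings, threshold, infer):
    """Skip readings below the threshold; 'wake' and run inference only when one crosses it."""
    results = []
    for tick, value in enumerate(readings):
        if value >= threshold:                      # cheap check, stands in for a wake interrupt
            results.append((tick, infer(value)))    # wake, run the model, return to sleep
    return results


def toy_infer(value):
    """Hypothetical stand-in for a neural network classifying a sensor reading."""
    return "alert" if value > 30.0 else "normal"


temperatures = [20.1, 20.3, 27.5, 31.2, 20.0]
print(run_duty_cycle(temperatures, threshold=25.0, infer=toy_infer))
```

In this example only two of the five readings trigger inference, so the model runs for a fraction of the samples it would otherwise process.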

Efficient hardware-software co-design reduces overall system power requirements, enabling new categories of AI-capable devices that can operate for extended periods on small batteries or even harvested energy. This opens new possibilities for deploying intelligent sensors in remote locations or inside sealed enclosures where battery replacement is impractical.

Real-World Implementation Challenges and Solutions

Despite their advantages, implementing AI on microcontrollers presents unique challenges. Memory constraints represent one of the most significant hurdles, requiring model optimization techniques including quantization (reducing numerical precision), pruning (removing unnecessary connections), and selecting efficient architecture variations specifically designed for resource-constrained environments.
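
As a minimal sketch of two of these techniques, the code below applies magnitude pruning and then symmetric int8 quantization to a small list of weights. It assumes per-tensor scaling and a flat weight list for simplicity; production toolchains use more elaborate schemes (per-channel scales, calibration data, structured sparsity), and the function names here are illustrative.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights, starting with the smallest magnitudes."""
    count = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:count]
    pruned = list(weights)
    for index in smallest:
        pruned[index] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor quantization: weight ~= scale * q, with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from int8 values."""
    return [q * scale for q in quantized]


weights = [0.02, -0.5, 0.31, -0.01, 1.27, -0.9]
pruned = prune_by_magnitude(weights, sparsity=0.5)   # half of the weights become zero
q_weights, scale = quantize_int8(pruned)             # each weight now fits in one byte
print(pruned)
print(q_weights, scale)
```

The rounding error per weight is at most half of `scale`, which is why accuracy usually needs to be re-checked after quantization.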

Development ecosystems now provide specialized tools for converting and optimizing models from popular frameworks like TensorFlow and PyTorch to run efficiently on embedded NPUs. These tools automatically handle the complex process of adapting models designed for cloud environments to work within the constraints of microcontroller hardware.

Hardware-aware training techniques have also emerged as a crucial approach to maximize accuracy within NPU constraints. By incorporating knowledge of the target hardware's capabilities during the training process, developers can create models that maintain high accuracy despite quantization and other optimizations. Best practices for partitioning workloads between NPU and CPU components further maximize overall system performance.
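
One common form of hardware-aware training is quantization-aware training, where the forward pass simulates the target's integer rounding so the model learns to tolerate it. The article does not name a specific method, so the toy below is an assumption for illustration: a single weight fitted with a straight-through gradient, not a real training framework.

```python
def fake_quant(weight, scale, qmin=-127, qmax=127):
    """Quantize then dequantize, so the forward pass sees int8 rounding error."""
    q = max(qmin, min(qmax, round(weight / scale)))
    return q * scale


def train_step(weight, x, y, scale, learning_rate):
    """One SGD step on squared error for y ~= w * x, using the fake-quantized weight.

    The gradient is applied to the float weight as if rounding were the identity
    (the straight-through estimator).
    """
    error = fake_quant(weight, scale) * x - y
    gradient = 2.0 * error * x
    return weight - learning_rate * gradient


# Toy problem with an assumed quantization step of 0.1 and a target of 0.73.
weight, scale = 0.0, 0.1
for _ in range(200):
    weight = train_step(weight, x=1.0, y=0.73, scale=scale, learning_rate=0.1)

deployed_weight = fake_quant(weight, scale)
print(weight, deployed_weight)
```

The float weight settles near a rounding boundary, and the value that would actually be deployed lands on a representable grid point next to the target.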

Future Directions and Industry Impact

NPU-accelerated MCUs are reshaping design paradigms across consumer and industrial markets. The ability to perform complex AI tasks on small, low-power devices is enabling new product categories and enhancing existing ones with intelligent decision-making capabilities. This shift is creating entirely new use cases, particularly in areas requiring always-on vision processing, secure voice assistants, and advanced predictive analytics.

The industry is witnessing rapid evolution of specialized AI hardware accelerators for specific domains. Rather than general-purpose NPUs, we're seeing the emergence of accelerators optimized specifically for vision tasks, audio processing, or sensor fusion. This specialization further improves efficiency for targeted applications.

Perhaps most significantly, we're observing the convergence of AI and traditional real-time control systems. This combination enables more autonomous and intelligent edge devices that can make sophisticated decisions locally while maintaining the deterministic performance needed for control applications. As this technology matures, it promises to fundamentally transform how we design and deploy intelligent systems across virtually every industry.

Sources

Edge AI Vision - STMicroelectronics to Boost AI at the Edge with New NPU-Accelerated STM32 Microcontrollers

WikiChip Fuse - Arm Launches the Cortex-M55 and Its MicroNPU Companion, the Ethos-U55

Arm Ethos-U Processor Series Brief