Dell unveils cost-effective on-premises AI infrastructure with NVIDIA Blackwell Ultra GPUs, claiming up to 62% lower LLM inferencing costs than public cloud while addressing security and data sovereignty concerns.

Drivetech Partners

Dell Technologies has expanded its enterprise AI infrastructure with the latest updates to its AI Factory, announced at Dell Technologies World 2025. According to Dell, these on-premises solutions deliver up to 62% lower costs for LLM inferencing than public cloud alternatives. Highlights include PowerEdge servers built on NVIDIA Blackwell Ultra GPUs, Project Lightning (a parallel file system Dell bills as record-breaking), and on-premises deployments of leading AI models, all aimed at enterprise requirements for performance, security, and data sovereignty.

Key Takeaways

Dell's AI Factory delivers up to 62% lower costs for LLM inferencing compared with public cloud options, addressing both economic and security concerns

New PowerEdge servers built on NVIDIA Blackwell Ultra GPUs scale to up to 256 GPUs per rack and enable up to four times faster LLM training than previous systems

Project Lightning, a parallel file system for PowerScale storage, achieves 97% network utilization, keeping large GPU clusters supplied with data

New on-premises deployment options for Cohere North, Google Gemini, and Meta's Llama 4 models let enterprises keep sensitive data under their own control

Dell's comprehensive approach spans client devices, data centers, and edge locations, creating a seamless AI implementation ecosystem

Dell's AI Factory: Addressing Critical Enterprise AI Needs

Dell Technologies, which describes itself as the world's top provider of AI infrastructure, has expanded its AI Factory approach with its latest updates. The timing is significant: 75% of organizations now consider AI essential to their strategy, and 65% are actively moving AI projects into production.

The company's comprehensive approach to AI infrastructure delivers up to 62% lower costs for large language model (LLM) inferencing compared to public cloud alternatives. This cost advantage, combined with enhanced performance, security, and data sovereignty capabilities, has already attracted over 3,000 global customers accelerating their AI initiatives with Dell's solutions.

What sets Dell's approach apart is its focus on solving the primary challenges enterprises face when implementing AI at scale: cost-effectiveness, performance optimization, security concerns, and regulatory compliance requirements. By providing on-premises solutions that address these challenges, Dell is removing significant barriers to AI adoption across industries.

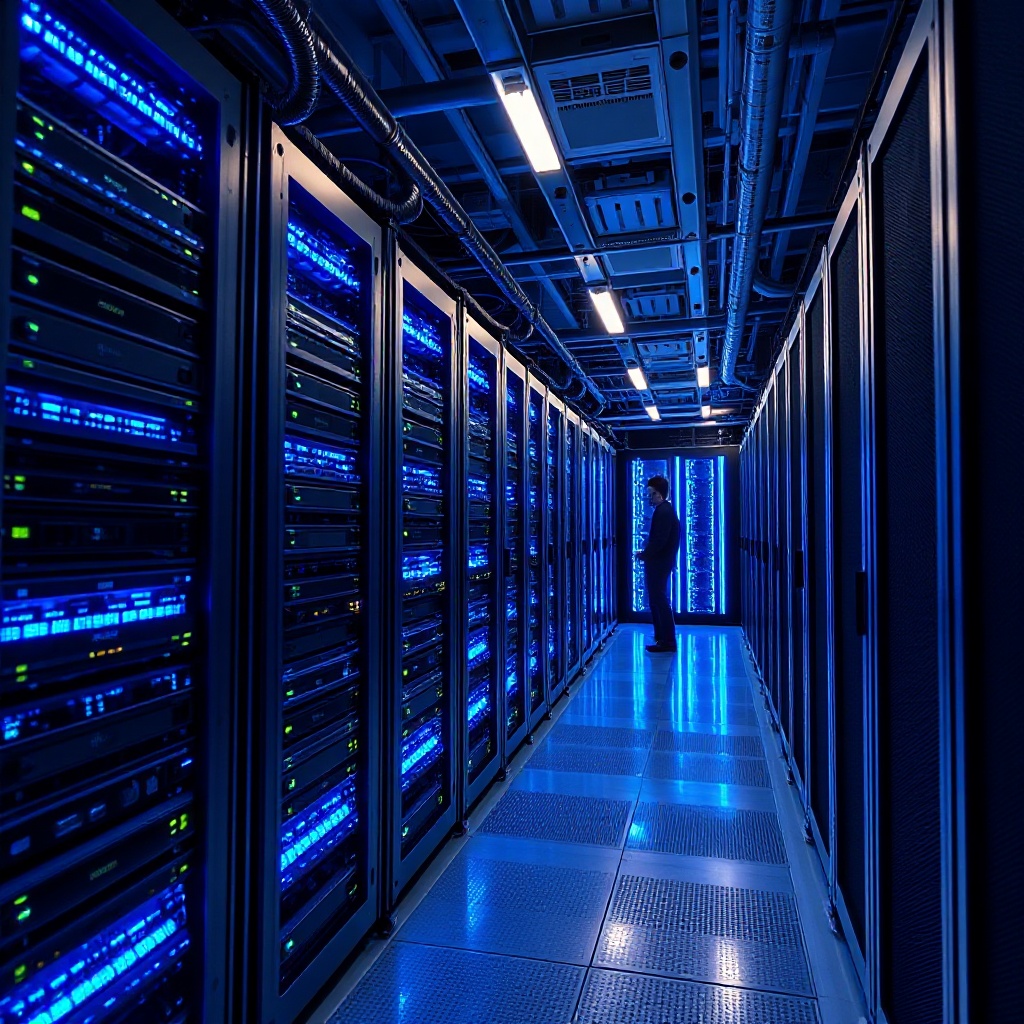

Groundbreaking NVIDIA Collaboration Brings Blackwell Ultra Power

At the heart of Dell's AI Factory advancements is a strengthened collaboration with NVIDIA that introduces next-generation PowerEdge servers designed for extreme AI workloads. The new Dell PowerEdge XE9780 and XE9785 servers support up to 192 NVIDIA Blackwell Ultra GPUs, expandable to 256 per Dell IR7000 rack.

These systems come in both air-cooled and liquid-cooled configurations to suit different deployment environments, and Dell says they enable LLM training up to four times faster than previous systems. Each server is built on the 8-way NVIDIA HGX B300 platform, targeting the most demanding enterprise AI applications.
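The rack-level GPU counts translate directly into server counts. The sketch below is illustrative arithmetic only: it assumes every node in the rack is an 8-GPU HGX B300 server and ignores how Dell actually lays out an IR7000 rack. The helper name `servers_needed` is hypothetical.

```python
# Illustrative arithmetic only. Assumption: every node is an 8-GPU
# NVIDIA HGX B300 server; real IR7000 rack layouts may differ.
GPUS_PER_SERVER = 8  # 8-way HGX B300 baseboard


def servers_needed(gpus_per_rack: int, gpus_per_server: int = GPUS_PER_SERVER) -> int:
    """Return how many 8-GPU servers make up a given rack-level GPU count."""
    if gpus_per_rack % gpus_per_server:
        raise ValueError("GPU count must be a multiple of GPUs per server")
    return gpus_per_rack // gpus_per_server


# Rack-level GPU counts quoted for the XE9780/XE9785 in an IR7000 rack.
for gpus in (192, 256):
    print(f"{gpus} GPUs per rack -> {servers_needed(gpus)} servers")
```

Under that assumption, 192 GPUs corresponds to 24 servers and 256 GPUs to 32 servers.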

Direct integration of the NVIDIA AI Enterprise software platform streamlines deployment and management, creating a complete solution stack that's optimized for performance. This deep integration addresses the complexity of managing sophisticated AI environments, making these powerful systems more accessible to organizations without specialized expertise.

Project Lightning: Breaking Storage Barriers for AI Workloads

One of the most significant innovations announced is Project Lightning, a parallel file system for Dell PowerScale storage arrays designed specifically for AI workloads. It reaches near line-rate efficiency, with 97% network utilization, a critical advancement for data-intensive AI applications.

The new storage architecture enables thousands of GPUs to be fully saturated with data, breaking performance barriers that have traditionally limited AI system capabilities. This addresses a fundamental bottleneck in AI infrastructure: keeping powerful GPUs fed with the massive datasets required for training and inference.
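To make the 97% figure concrete: network utilization is the fraction of a link's line rate that actually carries data. The sketch below assumes a hypothetical 400 Gb/s port; neither that port speed nor the helper names come from Dell's announcement.

```python
# Illustrative arithmetic only. The 400 Gb/s port speed is a hypothetical
# example, not a Project Lightning specification.


def effective_gbps(line_rate_gbps: float, utilization: float = 0.97) -> float:
    """Usable throughput (Gb/s) when a link runs at a fraction of its line rate."""
    return line_rate_gbps * utilization


def gbps_to_gigabytes(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


link_gbps = 400.0  # hypothetical port speed
usable = effective_gbps(link_gbps)
print(f"{usable:.0f} Gb/s usable, about {gbps_to_gigabytes(usable):.1f} GB/s")
```

For a 400 Gb/s port, 97% utilization works out to about 388 Gb/s, or roughly 48.5 GB/s of data delivered toward the GPUs.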

Project Lightning provides industry-leading performance for AI workloads at enterprise scale with optimized data access patterns. This means organizations can:

Process larger datasets without performance degradation

Run more complex models without storage bottlenecks

Achieve faster time-to-results for AI training jobs

Scale their AI infrastructure more efficiently

Reduce overall system complexity

On-Premises AI Models: Power Without Compromise

Dell now offers secure on-premises deployment options for major AI foundation models, addressing growing data sovereignty concerns across industries. This first-of-its-kind approach allows enterprises to leverage powerful AI models while maintaining complete control over sensitive data.

The supported models include:

Cohere North: Enables integration with varied data sources and workflow control for intelligent, autonomous enterprise applications

Google Gemini: Deployed on Dell PowerEdge XE9680 and XE9780 servers, bringing foundation model capabilities directly to customer data centers

Meta's Llama 4: Available via Dell AI Solutions, ideal for prototyping and building agent-based enterprise applications

Additional partnerships with Glean (providing the first on-premises deployment for enterprise search) and Mistral AI (offering secure, customizable knowledge management) further expand the options available to enterprises looking to keep AI workloads on-site.

End-to-End AI Deployment: From Client to Edge

Dell's comprehensive approach to AI deployment spans the entire computing spectrum, creating a seamless experience across environments. This unified approach includes:

Client Devices: AI PCs with dedicated neural processing units enable local AI workloads without cloud dependence

Data Center: High-density, liquid-cooled PowerEdge servers power large-scale AI training and inferencing workloads

Edge Locations: Dell NativeEdge and distributed AI platforms bring real-time AI insights where data is generated

This end-to-end solution approach eliminates silos and fragmentation in AI implementation, creating a consistent experience for users and administrators. The integrated infrastructure stack allows organizations to deploy the right AI capabilities in the right locations based on their specific requirements for performance, security, and cost.

Expanding Partner Ecosystem Enhances Versatility

Dell's ecosystem approach includes partnerships with NVIDIA, AMD, Intel, major model providers, and Red Hat, ensuring highly interoperable solutions that meet diverse customer needs. These partnerships provide flexibility while maintaining the performance and security advantages of Dell's hardware foundation.

Key ecosystem developments include:

The Dell AI Platform with AMD offers 200G storage networking and an upgraded ROCm open software stack, with Day 0 support for models like Llama 4

Dell's collaboration with Intel leverages Gaudi 3 AI accelerators for cost-effective AI infrastructure

Red Hat integration provides flexibility and security with a business-critical AI software stack

This ecosystem approach ensures customers can choose optimal configurations based on their specific workload requirements, budget constraints, and existing technology investments. It creates a future-proof foundation that can evolve as AI technologies and business needs change.

Enhanced Security and Sovereignty for Regulated Industries

On-premises deployments ensure sensitive data remains within organizational boundaries, helping enterprises meet strict regulatory requirements in industries like healthcare, finance, and government. This addresses growing privacy concerns by maintaining control over data access and operations.

The Dell AI Factory approach reduces compliance risks associated with public cloud AI deployments while providing physical and logical security controls tailored to enterprise requirements. Organizations can customize security measures based on their specific policy needs and compliance obligations.

This focus on security and sovereignty is increasingly important as AI applications begin to process more sensitive data across organizations. Dell's approach provides the foundation for responsible AI deployment that protects both organizational and customer data.

Superior TCO for Enterprise AI Investments

Perhaps most compelling for cost-conscious organizations is Dell's claim of up to 62% lower costs for LLM inferencing compared with public cloud providers. This can improve ROI for enterprises investing in generative AI infrastructure and provides predictable costs, without the usage-based pricing fluctuations common with cloud services.

The on-premises approach eliminates data egress fees common with cloud AI implementations and reduces dependency on expensive cloud connectivity for large AI datasets. It also allows for hardware reuse and repurposing as AI strategies evolve, creating a more sustainable approach to AI infrastructure investment.
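A percentage saving such as "up to 62% lower costs" compares total spend over the same period: savings = (cloud cost - on-premises cost) / cloud cost. The minimal cost model below is a sketch under stated assumptions. Every price, volume, and helper name is a hypothetical placeholder, not Dell or cloud-provider pricing, and it does not reproduce Dell's own methodology. It illustrates why usage-based token charges and egress fees grow with volume while on-premises spend stays largely fixed.

```python
from dataclasses import dataclass

# Hypothetical cost model: all numbers are placeholders, not real pricing.


@dataclass
class CloudInference:
    price_per_million_tokens: float  # usage-based inference price, USD
    egress_per_gb: float             # data egress fee, USD per GB


@dataclass
class OnPremInference:
    hardware_cost: float             # up-front hardware purchase, USD
    operating_cost_per_month: float  # power, cooling, support, USD


def cloud_total(c: CloudInference, tokens_m_per_month: float,
                egress_gb_per_month: float, months: int) -> float:
    """Cloud spend scales with usage: token charges plus egress fees."""
    monthly = (c.price_per_million_tokens * tokens_m_per_month
               + c.egress_per_gb * egress_gb_per_month)
    return monthly * months


def onprem_total(o: OnPremInference, months: int) -> float:
    """On-premises spend is largely fixed: hardware plus flat operating costs."""
    return o.hardware_cost + o.operating_cost_per_month * months


def savings_pct(cloud: float, onprem: float) -> float:
    """Percentage by which the on-premises total undercuts the cloud total."""
    return 100.0 * (cloud - onprem) / cloud


# Placeholder scenario over a three-year period.
cloud = cloud_total(CloudInference(2.0, 0.09), tokens_m_per_month=50_000,
                    egress_gb_per_month=20_000, months=36)
onprem = onprem_total(OnPremInference(1_500_000, 20_000), months=36)
print(f"cloud ${cloud:,.0f} vs on-prem ${onprem:,.0f}: "
      f"{savings_pct(cloud, onprem):.0f}% lower on-prem")
```

A 62% saving means the on-premises total is 38% of the cloud total; for example, `savings_pct(100.0, 38.0)` returns 62.0. Because the on-premises side is mostly fixed, the result depends heavily on how fully the hardware is used over its lifetime.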

For organizations committed to AI as a core strategic capability, Dell's approach provides both immediate cost advantages and long-term financial benefits through greater control over infrastructure spending and utilization.
